It has been argued that dissimilarities are potentially be a good alternative for features. How to build a good representation will be discussed later. Here the question will be faced: what is a good measure? What type of measurement device should be used? What properties do we demand?

It has been argued that dissimilarities are potentially be a good alternative for features. How to build a good representation will be discussed later. Here the question will be faced: what is a good measure? What type of measurement device should be used? What properties do we demand?

If features are given or can be defined they can be used to define a distance measure between objects. This can be understood as the euclidean distance in a properly scaled feature space. For good features this will also result in good dissimilarities. However, as long a dissimilarities are based on features their performance will be determined by the quality of these.

No class overlap

An important defect of features has been mentioned before. As features do not describe the full objects it is possible that two objects that are different have a zero distance based on the available features. This is an essential cause of class overlap in feature spaces. Dissimilarities offer the possibility to overcome this. If the dissimilarity measure is defined in such a way that objects have a zero distance to itself or to entirely identical copies of themselves (which thereby should belong to the same class) there is no class overlap.

Class consistency

Additional conditions to use this for generalization are that small object deviations should result in small dissimilarities and that if these dissimilarities are sufficiently small, objects belong to the same class. Every object has thereby a small neighborhood of other objects of their own class. If there is a non-zero probability to encounter an object in such a neighborhood then this approach will be consistent. By this is meant that asymptotically (for increasingly large training set sizes) an arbitrary object to be classified has as a nearest neighbor an object of the same class.Phrased differently: for sufficiently small neighborhoods of every object the probability to find an object of a different class is zero and to find an object of the same class is non-zero. We will call this property class consistency.

These conditions are easy to fulfill for objects that can be fully described by shape: images, time signals and spectra. For sufficiently dense samplings many dissimilarity measures can be defined that fulfill them, e.g. all Minkowsky distances, the Hausdorff distance, the earth mover distance, dynamic time warping and many other shape distance measures. There are some additional properties that may be helpful.

Dissimilarities versus distances

It is very common to define dissimilarity measures that are non-negative. It is however not essential. It is not a problem for the class consistency if the dissimilarity of an object to itself is negative, as long a it is the smallest distance possible for that object. It is in such a situation still possible that all objects with sufficiently small dissimilarities (objects that are most similar to the object under consideration) are of the same class.

Not all dissimilarity measures are symmetric. The dissimilarity as measured between the objects A and B may be different from the dissimilarity as measured between B and A. Examples are the Hausdorff distance and the Kullback Leibler divergence. Often a symmetric measure is constructed by averaging or by using the minimum or the maximum of the two values. This may result in a loss of information. How severe this may be and how this loss can be avoided is still an area of research.

A popular property of dissimilarities is whether the triangle inequality is satisfied. This means that for any two objects the dissimilarity measures directly between them is not larger than the dissimilarity found for a detour taken over a third object. Dissimilarity measures that satisfy this condition and that are symmetric, non-negative and only zero for the dissimilarity of an object with itself are called metric. In the mathematical literature metric dissimilarities are called distances. That is why the word dissimilarity is used here as it refers to a lousy, non-proper distance measure.

For pattern recognition purposes the metric property is not so significant. It is of much more interest whether the measure is class consistent, as discussed above, or whether it is euclidean, as will be discussed below. The first guarantees well separable classes and the latter is good for constructing a representation in generalization is well possible.

Euclidean dissimilarities

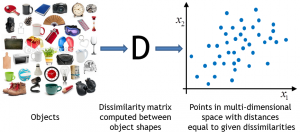

If the dissimilarities between a set of objects can be understood as the euclidean distances between an equally large set of points in a vector space than the dissimilarity measure is called euclidean. If this is the case the construction of such a space, called embedding, may result in a representation that is well suited for generalization. All traditional tools developed in multi-variate statistics and machine learning can be used for this representation to build good classifiers.

Also in case a dissimilarity measure is just approximately euclidean this can be a useful procedure. Like in removing asymmetry, however, this may also result into a loss of information. It is a main target of the research on dissimilarity representations to understand for what applications and for what measures this is the case.

Filed under: PR System • Representation