Is the neural network model good for pattern recognition? Or is it too complex, too vague, too clumsy to be of any use in practical applications or in building understanding?

The relation between the pattern recognition community and these questions has always been very sensitive. Its history is also interesting for observing how science may proceed. It shows clear examples of the refutation of hypotheses by a single counterexample as well as paradigm shifts inside a scientific community.

How science proceeds

According to Karl Popper it is essential for a scientific theory that it is falsifiable. It should be possible to make an observation that contradicts the theory. A theory that concludes that “all swans are white” is refuted by the observation of a black swan. Disciplines such as history and psychoanalysis that cannot be refuted by such an observation are not true sciences in the Popper sense.

After a theory has been refuted, human imagination and creativity should construct a new theory, i.e. a new set of hypotheses, that corresponds with all observations made so far. This is in contrast with classical inductivism, which states that scientific truth can be derived from observations.

Thomas Kuhn observed that this is certainly not how it works in practice. Scientific theories are hardly ever abandoned because of a single counterexample. Scientific communities tend to continue with an established theory even if observations are reported that cannot be explained by it. Under social and political pressure such observations are questioned, neglected or put aside for later verification and explanation. One should not demolish a castle in which many careers are invested because of a single experiment that may have been performed badly or put in the wrong context.

Such an established theory that constitutes the basis of many research studies and scientific meetings is called a paradigm. At some moment it may become unavoidable, due to many contradicting reports, for this paradigm to be given up and replaced by something new. A paradigm shift is then at hand.

Any researcher who is or wants to be active in a scientific community, e.g. by publishing research papers or presenting his work at conferences, and especially if he wants to write project proposals, is encouraged to read Kuhn’s controversial 1962 book The Structure of Scientific Revolutions. It may help to understand why some good papers are rejected, while the middle-of-the-road project proposals using the fashionable terminology are accepted.

Neural networks and pattern recognition

Pattern recognition is mainly an engineering field. There are, however, underlying scientific theories. One is that learning from examples is done by human beings, by young children and to some extent even by animals. The basis for this ability is the nervous system and especially the brain. So if we want to construct systems that can learn, why not start by modelling neurons?

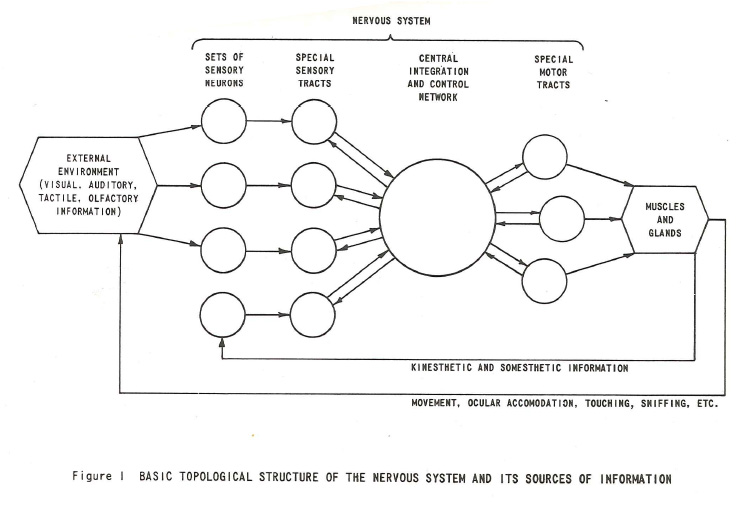

The above picture is figure 1 of the book on neurodynamics by Frank Rosenblatt published in 1962. He tried to model the nervous system in such a way that it could be applied to real data. His model consists of layers of connected Threshold Logic Units (TLUs). Each unit has a weighted input and a thresholded output, thereby simulating a single neuron. Rosenblatt named it the perceptron. His research raised a lot of enthusiasm and inspiration.
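To make the idea of a TLU concrete, here is a minimal sketch in Python. It is not Rosenblatt's formulation, just an illustration of "weighted input, thresholded output". The function name `tlu`, the helper units and the hand-picked weights and thresholds are all assumptions made for this example.

```python
from typing import Sequence


def tlu(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation >= threshold else 0


# Hand-picked (assumed) weights and thresholds: the same unit behaves like
# a logical AND or a logical OR, depending only on where the threshold sits.
def and_unit(x1: int, x2: int) -> int:
    return tlu([x1, x2], [1.0, 1.0], 1.5)


def or_unit(x1: int, x2: int) -> int:
    return tlu([x1, x2], [1.0, 1.0], 0.5)


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, "AND:", and_unit(x1, x2), "OR:", or_unit(x1, x2))
```

A single unit like this draws one straight line (or hyperplane) through the input space and outputs 1 on one side of it. Learning then amounts to finding suitable weights and a threshold from examples instead of picking them by hand.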

Later in the 60s Nilsson published his famous book on Learning Machines. It is a more formal, mathematical study, showing the possibilities and difficulties of multilayer networks based on TLUs. In short, the possibilities are as large as the difficulties of training them. In the same period other pattern classifiers were studied, linear as well as non-linear ones, based on density estimations or on object distances and potential functions (later called kernels). These were much easier to train.

Around 1970 a very remarkable event happened. Minsky and Papert showed very clearly the limitations of perceptrons. A seemingly very simple problem appeared not to be solvable by a linear perceptron: does a binary image show a single blob or two or more disconnected blobs? They proved that this problem based on local features is equivalent to the XOR problem, which has no linear solution. Non-linear solutions have to be found by multilayer perceptrons. These are not only difficult to train, but also raise the danger of peaking (overtraining), of which researchers gradually became aware.
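The XOR part of this argument can be illustrated with a small sketch. It does not implement the blob connectivity problem itself, and the grid search is only an illustration, not a proof. The short proof is: a single unit would need a positive threshold t to reject (0, 0), weights w1 ≥ t and w2 ≥ t to accept (0, 1) and (1, 0), and yet w1 + w2 < t to reject (1, 1), which is impossible. The grid of candidate weights and the hand-wired two-layer network below are assumptions chosen for this example.

```python
from itertools import product

# Truth table of XOR: inputs -> desired output
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def tlu(inputs, weights, threshold):
    """Threshold Logic Unit: 1 if the weighted sum reaches the threshold."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= threshold else 0


def single_unit_solves_xor(w1, w2, threshold):
    """Check whether one TLU with these parameters reproduces XOR exactly."""
    return all(tlu(x, (w1, w2), threshold) == y for x, y in XOR.items())


# Try every (w1, w2, threshold) on a coarse grid from -4 to 4 in steps of 0.5.
grid = [i / 2 for i in range(-8, 9)]
solutions = [p for p in product(grid, repeat=3) if single_unit_solves_xor(*p)]


def two_layer_xor(x1, x2):
    """Hand-wired two-layer network: XOR = AND(OR(x1, x2), NAND(x1, x2))."""
    h_or = tlu((x1, x2), (1, 1), 0.5)
    h_nand = tlu((x1, x2), (-1, -1), -1.5)
    return tlu((h_or, h_nand), (1, 1), 1.5)


if __name__ == "__main__":
    print("single-unit solutions found on the grid:", len(solutions))
    for (x1, x2), y in XOR.items():
        print(x1, x2, "->", two_layer_xor(x1, x2), "(expected", y, ")")
```

Note that the two-layer solution was wired by hand. Finding such hidden units automatically from examples is exactly the training difficulty mentioned above.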

Paradigm shift

The facts that simple problems could not be solved by a single linear perceptron and that multilayer perceptrons were difficult to train were for many researchers the counterexample against the hypothesis that the learning capability of the nervous system could be well simulated by multilayer perceptrons. It caused a paradigm shift in the field of artificial intelligence (causing a so-called AI winter) and even more strongly in pattern recognition. The latter field had good alternatives for trainable pattern classifiers. Engineers were thereby glad to have good reasons to avoid the complicated field of perceptrons.

It took almost 25 years before the multilayer perceptron, reincarnated as the Artificial Neural Network (ANN), was allowed to re-enter the field. All that time people presenting studies about this topic were critically questioned: didn’t you read Minsky and Papert? Are you not aware of the peaking phenomenon? Another paradigm shift was needed to allow neural networks into the pattern recognition toolboxes. How this happened will be the topic of another post.
