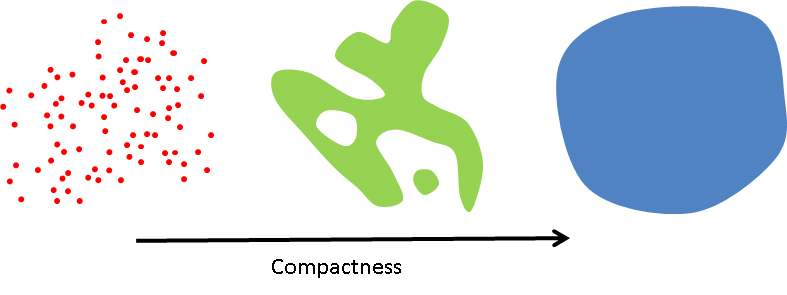

Around 1965 the the concept of compactness was discussed in the Russian pattern recognition literature [1]. It has been almost entirely neglected by the authors of the textbooks published in the West. There is however a strong relation with the careful creation of a good representation as advocated by us. Here we will describe the concept of compactness and argue that the representation of objects in a pattern recognition system should be preferably such that each of the classes is compact.

Classes in pattern recognition problems are often compact …

In the previous post we already formulated two important rules for a good representation:

- Every real world difference between objects that may play a role in the human judgement of their similarity should make a difference in the representation.

- The representation of a real world objects, i.e. the mapping from the object to its representation, should be continuous.

For most pattern recognition applications holds that a minor modification of the original objects does not change their class. If we modify a chair slightly, it will not become a table. Objects to be classified can be thought of as modifications of the example objects in the training set. If the following representation is continuous then this will result in connected class domains. The representations of all objects in a class may result in a single large domain or in a set of unconnected sub-domains in case no path exist between some objects of the same class that remains inside the class. For many problems the number of subdomains if finite, if not one.

… and thereby it works

If the above is satisfied then the set of objects from the same class is compact*. This means that by randomly sampling the class with a growing set of objects (e.g. the training set) their representations will become arbitrarily close to any given object (e.g. an object to be classified). If this holds for all classes and probability density functions in the representation space behave sufficiently well, a large training set will contain objects that just slightly differ from the objects to be classified. Consequently at least the nearest neighbor classifier will perform well.

Also most other classifiers, in particular the ones that optimize in some geometric way a decision function in the representation space, will profit from the compactness. Implicitely it is in classifier design assumed that the classes are compact, sometimes called the compactness hypothesis.

Our conclusion: good representations are compact. If the real world object classes are compact it is sufficient that the representation is continuous.

* The definition of compactness used here is different from the one used in set theory.

References

[1] A.G. Arkedev and E.M. Braverman, Computers and Pattern Recognition, Thompson, Washington, D.C., 1966.

[2] R.P.W. Duin, Compactness and Complexity of Pattern Recognition Problems, in: C. Perneel (eds.), Proc. Int. Symposium on Pattern Recognition “In Memoriam Pierre Devijver” (Brussels, B, Feb.12), Royal Military Academy, Brussels, 1999, 124-128.

Filed under: Foundation • PR System • Representation