The pixel representation, in its broadest sense, samples the objects and uses the samples to build a vector space. If the sampling is sufficiently dense, it captures everything. How, then, can we lose information? What is wrong?

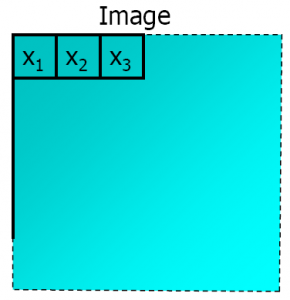

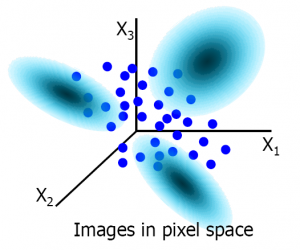

Let us take an image and sample it as shown on the left above. The pixels can then be ordered into one long vector whose elements are their grey values. The length of this vector, and thereby the dimension of the pixel space, equals the number of pixels in the image. Different images can only be represented in the same space if they are sampled with the same number of pixels. The right plot above shows the first three dimensions of the pixel space. Each dot there is a vector in the 3-dimensional space spanned by the first three pixels, and the set of dots represents a set of images.
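A minimal NumPy sketch of this vectorization step, assuming grey-value images stored as 2-D arrays. The function name `to_pixel_vectors` and the random images are hypothetical, for illustration only. Row-major flattening is just one possible ordering of the pixels; any fixed ordering works as long as it is the same for all images.

```python
import numpy as np

def to_pixel_vectors(images):
    """Stack equally sized grey-value images as rows of a data matrix (hypothetical helper)."""
    shapes = {np.shape(img) for img in images}
    if len(shapes) != 1:
        # Images with different numbers of pixels do not live in the same pixel space.
        raise ValueError(f"all images need the same number of pixels, got shapes {shapes}")
    # Row-major flattening: pixel (r, c) of an h x w image becomes feature r * w + c.
    return np.stack([np.asarray(img, dtype=float).ravel() for img in images])

rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(16, 16)) for _ in range(5)]  # 5 synthetic 16 x 16 images
X = to_pixel_vectors(images)
print(X.shape)   # (5, 256): 5 objects in a 256-dimensional pixel space
print(X[:, :3])  # their coordinates along the first three axes x1, x2, x3
```

Passing, say, a 16 × 16 image together with a 32 × 32 image raises a `ValueError`, mirroring the requirement that all images be sampled with the same number of pixels.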

The pixels x1 and x2 are neighbors in the image, and so are x2 and x3. The pixels x1 and x3, however, are not. This is not visible in the pixel space: the axes x1, x2 and x3 all relate to each other in the same way, as they are mutually perpendicular. The pixel representation itself neither shows nor stores which pixels are neighbors and which are not. Only when a number of images is represented in the space, forming a cloud of vectors, can the lost relations between the pixels be reconstructed by computing the correlations between the axes. This requires a set of images: thanks to multiple observations, the lost information might be recovered.
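The following sketch illustrates how correlations over a set of images can reveal neighborhood relations that the axes themselves do not show. It assumes a hypothetical image model (white noise blurred by a 3 × 3 box filter with wrap-around borders), chosen only because its pixel correlations are easy to predict: neighbors share 6 of 9 noise values, pixels two apart share 3, and pixels four apart share none.

```python
import numpy as np

def smooth_images(n, size, rng):
    """Hypothetical image model: white noise blurred by a 3 x 3 box filter (wrap-around borders)."""
    noise = rng.standard_normal((n, size, size))
    padded = np.pad(noise, ((0, 0), (1, 1), (1, 1)), mode="wrap")
    out = np.zeros_like(noise)
    for dr in range(3):
        for dc in range(3):
            out += padded[:, dr:dr + size, dc:dc + size]
    return out / 9.0

rng = np.random.default_rng(1)
size = 8
imgs = smooth_images(2000, size, rng)
X = imgs.reshape(len(imgs), -1)   # pixel representation: one row (vector) per image
C = np.corrcoef(X, rowvar=False)  # 64 x 64 correlation matrix between the axes

x1, x2, x3, far = 0, 1, 2, 4      # pixels (0,0), (0,1), (0,2) and (0,4) of each image
print(f"corr(x1, x2)  = {C[x1, x2]:.2f}")   # neighbors: close to 6/9
print(f"corr(x1, x3)  = {C[x1, x3]:.2f}")   # two pixels apart: close to 3/9
print(f"corr(x1, far) = {C[x1, far]:.2f}")  # no shared noise: close to 0

# Without using pixel positions, take each axis's most correlated other axis.
np.fill_diagonal(C, -np.inf)
best = C.argmax(axis=1)           # under this model, always one of the 4-connected neighbors
```

With only a handful of images the estimates become noisy and this recovery breaks down, which is why a set of images is essential.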

If just the pixel representation is given, without the positions of the pixels in the image, it would be very difficult to find out which axis belongs to which pixel. The correlation matrix is very large (65,536 × 65,536 even for small images of 256 × 256 pixels), and many example images would be needed for a proper reconstruction.
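A back-of-the-envelope check of that size, assuming the matrix is stored in float64, followed by a small-scale illustration of why many images are needed: a correlation matrix estimated from n images has rank at most n − 1, so with far fewer images than pixels it is singular. The sizes in the second part are hypothetical.

```python
import numpy as np

# Size of the full correlation matrix for 256 x 256 images (float64 storage assumed).
h, w = 256, 256
d = h * w                         # number of pixels = dimension of the pixel space
entries = d * d                   # entries of the d x d correlation matrix
gib = entries * 8 / 2**30         # 8 bytes per float64 entry, in GiB
pairs = d * (d - 1) // 2          # distinct off-diagonal correlations (matrix is symmetric)
print(d, f"{entries:,}", gib, f"{pairs:,}")  # 65536 4,294,967,296 32.0 2,147,450,880

# Small-scale illustration: n images give an estimated matrix of rank at most n - 1.
rng = np.random.default_rng(3)
n_images, n_pixels = 20, 100      # hypothetical sizes for the demo
X = rng.standard_normal((n_images, n_pixels))
C = np.corrcoef(X, rowvar=False)  # 100 x 100 correlation matrix from only 20 images
rank = np.linalg.matrix_rank(C)
print(C.shape, rank)              # (100, 100) 19
```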

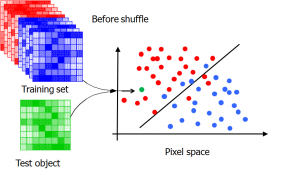

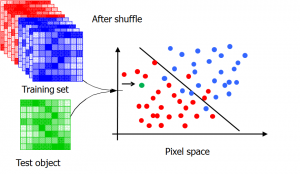

Another example showing that the pixel representation does not use the connectivity pattern of the pixels in the image is the thought experiment illustrated above. The left side shows the original situation: a set of training images, their configuration in the pixel space, a classifier found for this training set, and the representation of a test image. Let us now randomly shuffle the pixels, applying the same shuffle to all images. By this process their connectivity pattern is lost (randomly changed). What happens in the pixel space? Just a permutation of the axes, which is a rotation (possibly combined with a reflection). The relative configuration of all objects, training images as well as test images, remains the same: all distances and inner products are preserved. Most classifiers are insensitive to such rotations: they classify all objects in the same way after the rotation. So the classification of a test object is not affected by the random shuffling of the pixels.
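The thought experiment can be checked numerically. This sketch assumes random data in place of real images, arbitrary labels, and a 1-nearest-neighbor classifier as one example of a classifier that depends only on Euclidean distances; the helper `nearest_neighbor_labels` is hypothetical. One fixed permutation is applied to the pixels of every training and test image.

```python
import numpy as np

def nearest_neighbor_labels(train_X, train_y, test_X):
    """1-NN classifier using squared Euclidean distances in the pixel space (hypothetical helper)."""
    d2 = ((test_X[:, None, :] - train_X[None, :, :]) ** 2).sum(axis=2)
    return train_y[d2.argmin(axis=1)]

rng = np.random.default_rng(2)
n_pixels = 64                          # e.g. 8 x 8 images, flattened
train_X = rng.random((40, n_pixels))   # random stand-ins for training images
train_y = rng.integers(0, 2, size=40)  # arbitrary labels for the demo
test_X = rng.random((10, n_pixels))

perm = rng.permutation(n_pixels)       # one shuffle, applied to every image
P = np.eye(n_pixels)[:, perm]          # permutation matrix: x @ P equals x[perm]

before = nearest_neighbor_labels(train_X, train_y, test_X)
after = nearest_neighbor_labels(train_X[:, perm], train_y, test_X[:, perm])

print(np.allclose(train_X @ P, train_X[:, perm]))  # True: shuffling is multiplication by P
print(np.allclose(P.T @ P, np.eye(n_pixels)))      # True: P is orthogonal
print(np.array_equal(before, after))               # True: same classification
```

Because P is orthogonal, every distance between images is unchanged, so any classifier that only looks at distances makes exactly the same decisions after the shuffle.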

This thought experiment illustrates that pixel-based classifiers do not use the spatial connectivity of the pixels in the images. In fact, the images are torn into pieces and thrown on a heap of isolated pixels, and this heap is used to build a classifier. Since neglecting useful knowledge has to be paid for with more observations, pixel representations can only be used for large collections of images. Better representations are needed if classifiers have to be constructed from small sets of training data.

Filed under: PR System • Representation