Imagine a two-class problem represented by 100 training objects in a 100-dimensional feature (vector) space. If the objects are in general position (not by accident in a low-dimensional subspace) then they still fit perfectly in a 99-dimensional subspace. This is a ‘plane’, formally a hyperplane, in the 100-dimensional feature space. We will argue that this hyperplane can be easily transformed into a linear decision function that perfectly separates the classes A and B.

Consider a minor rotation of the plane. This can be done such that a particular training object of the class A is not anymore in the plane but lies outside it, on the A-side. For each of the other 99 objects, either belonging to class A or B, a similar rotation (or a small translation) can be found as there are sufficient degrees of freedom to realize this. Consequently, the set of 100 objects is linearly separable.

So any set of two-class objects in general position with a size equal or less than the dimensionality of its feature space is linearly separable. This is independent of the way they are labeled. Will this plane be a good classifier? It can always be constructed, even if the objects are labeled at random. Thereby, there is no reason why it should generalize and be able to predict the labels of new incoming objects. Consequently, feature spaces of a dimensionality larger than the size of the training set should be avoided. Classifiers built in such space are not expected to generalize.

A great paper by Cover

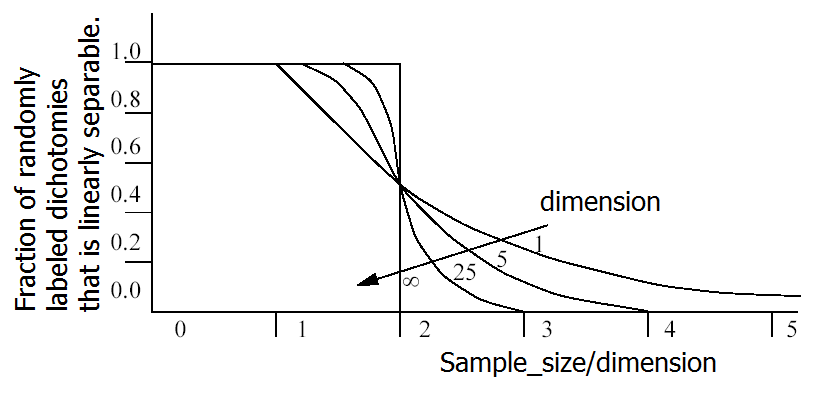

The above is an intuitive reasoning which is much better mathematically formulated in a great paper by Cover. For the starting student it is a pleasure to read. It is also very inspiring for the further study of the field. It shows that if the sample_size is smaller than the dimension all possible labelings in a two-class problem are linearly separable. If the sample_size is at most twice the dimensionality then still 50% of the possible labelings are linearly separable; see the below figure for the complete curves.

Cover even generalized this from linear to polynomial classifiers. This plot gave rise to the rule of thumb to reduce the number of features to at most half of the sample size before computing a classifier. This has been in use for many years in the field of pattern recognition.

There is something wrong

I have told the above story during 25 years to hundreds of students to support the statement that high-dimensional spaces, where high is meant relative to the sample size, should be avoided. After some point more feature are useless, or even worse, counterproductive. Nobody has ever complained.There is some convincing beauty in the mathematics, one can say 😉

The statement however is wrong, so I stopped sharing this explanation.

There is nothing wrong with the curves and the mathematics they describe. What is wrong is the interpretation for pattern recognition. This became clear when more and more classifiers were proposed that gave good results in various applications for situations that did not obey this rule. Examples are neural network classifiers and support vector machines.

Why is Cover’s reasoning not applicable to almost any pattern recognition application?

It is true that the dimensionality problems exist, but problems as indicated above do not raise in practice as severe as shown and certainly not for an arbitrary classifier. The point is that in real world pattern recognition problems the object labeling is not random but usually makes sense. The selection of the problem and its representation are based on human prior knowledge by which almost all bad labelings are ruled out. Moreover, almost always the compactness demand naturally holds: similar objects tend to belong to the same class and tend to be represented nearby in the representation. Configurations which may be resulting from random labelings are in practice very improbable.

The Vapnik-Chervonenkis complexity

Cover’s reasoning is a special case of the complexity study as performed by Vapnik. It is beautiful and a tempting challenge to understand. It results in the Vapnik-Chervonenkis (VC) dimension, a measure for classifier complexity. This yields bounds for the classification error as a function of the sample size. These bounds are however very pessimistic: huge sample size are necessary to guarantee (in probability) some performance.

The problem with this theory is the same as with the Cover’s study. It is based on the assumption that all possible labelings are equally probable. This does not hold in practice at all and it becomes even more unlikely for large sample sizes. Beautiful theories, mathematically elegant, but almost of no use to pattern recognition.

Filed under: Classification • Evaluation • History • Overtraining • PR System • Representation