The field of pattern recognition studies automatic tools that integrate existing knowledge and given observations into enriched knowledge applicable to future observations. Of particular interest are general techniques that are useful for a whole set of applications. In order to relate objects, they have to be considered in a common representation. Should this representation be postulated by the available knowledge, or can it be learned from observations?

- Copyright Abstruse Goose

Before learning is possible, observations have to be collected. So at least sensors have to be chosen and relevant objects have to be found. Other common types of knowledge are class labels for some or all example objects, class prior probabilities, possible features or (dis)similarity measures, and sometimes even the family of probability distributions of some measurements.

Possible representations are feature spaces, aligned images of the same size, spectra, aligned time signals, graphs, strings, (dis)similarities, and bags of features (words). The alignment of images and time signals is needed; otherwise, objects observed somewhere in space or time cannot be related. On the basis of a representation, objects can be compared and classifiers between classes of objects can be trained.
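As an illustration of how a representation makes objects comparable, here is a minimal sketch of a dissimilarity representation: every object is described by its distances to a set of prototype objects, and a new object receives the label of its nearest prototype. The toy data, the function names and the choice of the Euclidean distance are assumptions made for this example only.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length (aligned) sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def dissimilarity_representation(objects, prototypes, dist=euclidean):
    """Represent each object by its vector of distances to the prototypes."""
    return [[dist(obj, p) for p in prototypes] for obj in objects]

def nearest_prototype_label(row, prototype_labels):
    """Return the label of the closest prototype (nearest-neighbour rule)."""
    best = min(range(len(row)), key=lambda i: row[i])
    return prototype_labels[best]

# Hypothetical toy data: short aligned signals, two prototypes per class.
prototypes = [[0.0, 0.1, 0.0], [0.1, 0.0, 0.1],
              [1.0, 0.9, 1.0], [0.9, 1.0, 1.1]]
prototype_labels = ["A", "A", "B", "B"]
new_objects = [[0.05, 0.05, 0.0], [1.0, 1.0, 1.0]]

# Each row of D is one object expressed in the dissimilarity space.
D = dissimilarity_representation(new_objects, prototypes)
predicted = [nearest_prototype_label(row, prototype_labels) for row in D]
```

Any other distance measure (for strings, graphs or spectra) could be passed as `dist`; the signals only have to be aligned because the Euclidean distance compares them element by element.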

Can such a representation be learned? Is it possible to find a good representation on the basis of a set of example objects? This is currently a frequently studied topic; see, e.g., the August issue of IEEE Transactions on Pattern Analysis and Machine Intelligence. Popular approaches are deep learning (finding a good vector space) and metric learning (finding a good distance measure). See also a post on metric learning and consciousness.

The objects used for learning a representation may also be used for finding a classifier. These two processes may thereby be integrated and merged into a single generalization procedure. The same observation was made in the seventies w.r.t. feature selection and feature extraction. If this is done with the same objects, it can be considered a part of the classification procedure. It only makes sense to consider separate procedures if, in between, a human expert can make a decision (e.g. on some settings of the classifier) that cannot be optimized automatically. This is, however, a step that most researchers in the field definitely want to automate as well, certainly after they have already tried to learn the representation automatically.

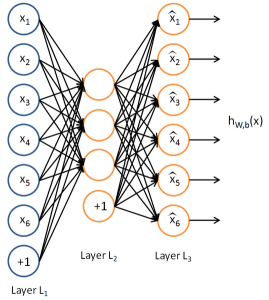

Autoencoder network used for deep learning. See the tutorial.

So learning a representation is in fact a step taken by an advanced classifier. An example of such a classifier is the neural network. In the first layers a representation is built that is used for training a classifier in the top layer. For some researchers this is a reason to state that deep learning is in fact just a new name for the field of artificial neural networks.

A significant difference, however, between learning a representation and training a classifier is that the former may be based on unlabeled samples only, while for the latter at least some labeled objects are needed. Learning from both labeled and unlabeled samples is called semi-supervised learning, which is thereby related to learning representations.
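The split between the two steps can be sketched in a few lines. In this example, the representation (a single principal direction, a much simpler stand-in for the deep networks discussed here) is learned from unlabeled samples only, and a nearest-mean classifier is then trained on just two labeled objects per class. The data, the function names and the choice of PCA are all assumptions made for illustration.

```python
import random

def mean_vector(rows):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def first_principal_direction(rows, iterations=100):
    """Leading principal direction via power iteration on the covariance."""
    mu = mean_vector(rows)
    centered = [[x - m for x, m in zip(r, mu)] for r in rows]
    dim = len(mu)
    cov = [[sum(r[i] * r[j] for r in centered) / len(rows)
            for j in range(dim)] for i in range(dim)]
    v = [1.0] * dim  # arbitrary starting vector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    return mu, v

def project(x, mu, v):
    """Map an object into the learned one-dimensional representation."""
    return sum((xi - mi) * vi for xi, mi, vi in zip(x, mu, v))

# Step 1: learn the representation from unlabeled samples only.
rng = random.Random(0)
unlabeled = [[rng.gauss(c, 0.5), rng.gauss(c, 0.5)]
             for c in (0.0, 4.0) for _ in range(100)]
mu, v = first_principal_direction(unlabeled)

# Step 2: train a nearest-mean classifier on a few labeled objects.
labeled = {"A": [[0.2, -0.1], [-0.3, 0.4]],
           "B": [[4.1, 3.8], [3.7, 4.2]]}
class_means = {label: sum(project(x, mu, v) for x in xs) / len(xs)
               for label, xs in labeled.items()}

def classify(x):
    """Assign the class whose mean is closest in the learned representation."""
    z = project(x, mu, v)
    return min(class_means, key=lambda label: abs(z - class_means[label]))

predictions = [classify([0.5, 0.2]), classify([3.5, 3.9])]
```

Using the 200 unlabeled samples together with the 4 labeled ones in this way is a very simple form of semi-supervised learning: the labels are only needed once the representation is fixed.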

The above makes clear that learning a representation is a part of the generalization step in pattern recognition. This step can only be made on top of a proper representation (see the post on true representations). In one way or another, an initial representation is needed in which the process of generalization can be started. Obviously, this one has to be chosen by the human expert on the application or by the pattern recognition analyst. This choice is crucial. If it is not optimal but sufficiently flexible, it might be corrected by a large training set. In case the available set of examples is small, a good representation is essential. This is an important topic of pattern recognition research: what are the proper representations in case of learning from small training sets? In that case, optimizing the representation or training an advanced, complex classifier is hardly possible without introducing the bias that comes with overtraining.
