The Nearest Neighbor (NN) rule is a classic in pattern recognition. It is intuitive and there is no need to describe an algorithm. Everybody who programs it obtains the same results. It is thereby very suitable as a base routine in comparative studies. But who invented it? Marcello Pelillo looked back in history and tried to give an answer.

The nearest neighbor rule

The NN rule for classification is a very special rule. In its vanilla form it stores all examples. There is no training and thereby no density information used. It entirely relies on the given examples and a user defined distance measure. Classification is based on a comparison with everything that has been stored.

Because no probabilistic information is used in the 1-NN rule, in contrast to almost all other classifiers, including the k-NN rule, it has the big advantage that the training set should not be necessarily based on a selective sampling. The teacher, i.e. the application expert who is responsible for designing the classifier, is allowed to use his knowledge for finding a good set of examples that represents the domain of interest and not the probability density function. Knowledge of the domain is usually better available than probabilistic knowledge and is difficult to be used otherwise.

A specific condition for using the rule is the availability of a proper distance measure. The chosen set of examples and the distance measure determine fully what will happen during classification. There is no correction is a training procedure in which highly probable and less probable errors are analyzed.

The NN rule is often used as a base procedure in benchmarking and comparative studies. As it is untrained it is expected that any trained rule that doesn’t beat it is useless for the application under study. The rule is very intuitive, but is sometimes surprisingly overlooked. For instance in the early days of the neural network wave procedures have been used that demanded heavy training resulting in more complex and less accurate systems than would be obtained by the NN rule.

History

A question recently raised in a very interesting paper by Marcello Pelillo is who invented the NN rule. Pelillo refers often to the famous and beautiful Cover and Hart paper (1967). That paper shows what happens if a very large aselectively chosen training set is used. The result is beautiful (the classification error is smaller than twice the Bayes error), but these are exactly opposite the conditions for which the rule is of interest.

Before Cover and Hart the rule was mentioned by Nilsson (1965) who called it “minimum distance classifier” and by Sebestyen (1962), who called it “proximity algorithm”. Fix and Hodges in their very early discussion on non-parametric discrimination (1951) already pointed to the NN rule as well. The fundamental principle known as Ockham’s razor: “select the hypothesis with the fewest assumptions” can be understood as the NN rule for nominal properties. It is, however, not formulated in terms of observations. Ockham worked in the 14th century and emphasized observations above ideas.

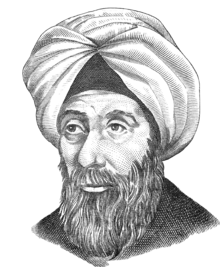

Alhazen

Before Ockham this was already done, as pointed out by Pelillo, by Alhazen (Ibn al-Haytham), a very early scientist (965 – 1040) in the fields of optics and perception. Pelillo cites several paragraphs from an English translation of one of his books (Alhacen’s Theory of Visual Perception. Philadelphia: American Philosophical Society, 2001) by Mark Smith. The most crucial ones are:

That universal forms of visible aspects occur in the soul and are impressed in the imagination is due to the fact that there are certain kinds of visible characteristics, such as form or shape, according to which individuals of a certain kind will be identical, whereas those individuals vary according to [other] particular characteristics that are perceived by the sense of sight. (p. 518)

That universal forms of visible aspects occur in the soul and are impressed in the imagination is due to the fact that there are certain kinds of visible characteristics, such as form or shape, according to which individuals of a certain kind will be identical, whereas those individuals vary according to [other] particular characteristics that are perceived by the sense of sight. (p. 518)

It is clear that this is not about a machine but describes what happens during perception in a human observer. Note that there is in fact a training described as a universal form (class mean or class mode?) is found. This is done by recalling the original objects, the particular forms:

It is thus through the effect of perceiving individuals of the same kind by sight that the universal form of their kind will recur [in the soul] along with the various particular forms of those individuals. (p. 518)

Finally the discrimination is described by a procedure that can be understood as a nearest neighbor search. The class membership of the nearest neighbor is used for new, visualized object.

Hence, when sight perceives some visible object, the faculty of discrimination immediately seeks its counterpart among the forms persisting in the imagination, and when it finds some form in the imagination that is like the form of that visible object, it will recognize that visible object and will perceive what kind of object it is. (p. 519)

As there is a process of generalization (training) involved, by determining the universal form, this rule is in fact a mixture of the nearest neighbor and something like the nearest mean rule. By using both, the particular forms as well as the universal form, Alhazen follows Aristotle as well as Plato. See our discussion on these two approaches in relation with the foundation of pattern recognition.

A final, critical comment. It seems that Alhazen suggests that the universal form and the particular forms on which it is based are stored together, in a similar way. The universal form, however, may be of an entirely different nature and may require a different distance measure. If this measure has to be derived during ‘training’ the entire procedure becomes much more complicated than a nearest neighbor rule. We will not know, as Alhazen’s description seems to be mainly verbal, without any more formal algorithm or equations. There was no direct need for that as he described human perception and not a machine.

Filed under: Classification • History